Posts By kimptoc

Things I love and hate about Node.js

I thought I’d try one of those link bait articles…

LOVE

- Threads/no threads – everything runs on a single thread, but callbacks let you write code as if there were many, which makes concurrent apps easy to write. Just keep things simple and use tools like async.js to control multiple callbacks – you don't want a callback pyramid of doom!

- Lightweight/fast – it runs on a VM (Google's V8), like Java and C# do, yet it's very quick to start.

- Same language on client and server – fewer brainfarts when switching between front-end and back-end code.

- Dynamic – no need to tell the compiler what type things are, it works out what you mean.

- It's new and cool :)

HATE

- It's JavaScript – there seem to be lots of “bad parts” to the language, so I write my JavaScript via CoffeeScript :)

- OTT braces and semicolons, e.g. function() { var a = 1; }. Probably this is a hang-up from seeing too much Java/C#.

- Function-based programming – and not in a Lisp-like way – every other line seems to be a new function definition.

- Variable declarations – var declarations get hoisted to the top of the enclosing function (not the block), so things might not work as you expect.

- == versus === – see the bad parts link above :(

- No types – especially for function parameters. If you miss out a parameter, the function carries on regardless, doing the wrong thing with the wrong parameter…

I am still in the “honeymoon” phase – the benefits seem to outweigh the disadvantages. Let's see what happens over the next 6-12 months…

Close scrapes with Ruby / Mechanize

A few years ago I agreed to help with a side project that needed to scrape some websites and also talk to some webservices.

Various front ends would generate the requests and my process would go through the db and process them.

The core of the engine was not a webapp – but Rails/ActiveRecord (AR) provided a good way of interacting with MySQL.

I tried a few threading strategies, but hit what seemed to be problems with database access under MRI threading. In retrospect, I think the problems were more of my own making: overlapping threads and poor design.

Moving to JRuby seemed to address some of the threading issues.

I initially used the Parallel gem, which seemed to do largely what I wanted. However, I was still getting AR issues, so I switched to JRuby. It then seemed more appropriate to use a Java-based parallelisation gem, so I went for ActsAsExecutor, a thin wrapper around the Java concurrency features. This was used to manage a pool of threads to handle the scraping/webservice calls:

ActsAsExecutor::Executor::Factory.create 15, false # 15 max threads, schedulable-false?

To poll for any work to be done, the Rufus scheduler gem was used. It was set up to check for pending records every few seconds, like so:

    scheduler = Rufus::Scheduler.start_new
    scheduler.every "2s", :allow_overlapping => false do
      # do some work
    end

This was kicked off in a Rails initializer.

One mistake I made was leaving out the allow_overlapping flag, which meant that if any job was slow, the next one would start and try to do the same work again.
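The effect of allow_overlapping => false can be illustrated without rufus-scheduler itself. This is a stdlib-only sketch, not rufus-scheduler's implementation: NonOverlappingJob and its tick method are hypothetical names, and a tick simply skips the work when the previous run still holds the lock (via Mutex#try_lock).

```ruby
# Sketch of "no overlapping runs": each scheduler tick calls #tick, and a tick
# is skipped if the previous run of the job is still in progress.
# NonOverlappingJob is a hypothetical class, not part of rufus-scheduler.
class NonOverlappingJob
  attr_reader :runs, :skipped

  def initialize(&work)
    @work = work
    @lock = Mutex.new    # held for the duration of a run
    @stats = Mutex.new   # protects the counters
    @runs = 0
    @skipped = 0
  end

  # Returns true if the work ran, false if this tick was skipped.
  def tick
    unless @lock.try_lock
      @stats.synchronize { @skipped += 1 }
      return false
    end
    begin
      @work.call
      @stats.synchronize { @runs += 1 }
      true
    ensure
      @lock.unlock
    end
  end
end
```

Whatever fires every 2 seconds would call job.tick; without the try_lock guard, a slow run and the next tick would both execute the work at the same time.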

Another trick which I think helped was to wrap db accessing sections like so:

    ActiveRecord::Base.connection_pool.with_connection do
      # db work...
    end

The scheduled task analyses each request and generates work items to be done in the thread pool. Another mistake I made was to set the work items' status to NEW and then, separately, re-query the db for NEW items to queue up for the thread pool. Only when an item was picked off the queue did its status advance. This left a window in which a subsequent scheduled run could re-queue the same items. The fix was to not re-query the db: the task generates the items itself, so it already knows them without going back to the db. Thus each run only works on the items it generates itself.
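A stdlib-only sketch of that fix, with an in-memory array standing in for the work items table. WorkItem, STORE, analyse_request and scheduled_run are hypothetical names, not the project's code. Each scheduled run queues exactly the items it just created, so a later run cannot pick up an earlier run's items while they are still NEW.

```ruby
# Sketch: each scheduled run queues only the work items it created itself,
# rather than re-querying for status NEW (which could re-queue another run's
# items before their status advanced). All names here are hypothetical.
WorkItem = Struct.new(:id, :request_id, :status)

STORE = []              # stand-in for the work items table
STORE_LOCK = Mutex.new

# Persist `count` NEW work items for a request and return exactly those items.
def analyse_request(request_id, count)
  STORE_LOCK.synchronize do
    Array.new(count) do
      item = WorkItem.new(STORE.size + 1, request_id, :new)
      STORE << item
      item
    end
  end
end

# One scheduled run: analyse the request and queue what it generated.
def scheduled_run(queue, request_id, count)
  analyse_request(request_id, count).each { |item| queue << item }
end

queue = Queue.new
scheduled_run(queue, 1, 3)
scheduled_run(queue, 2, 2)   # run 1's items are still NEW but are not re-queued

# Drain the queue with two worker threads; :stop tells a worker to finish.
processed = []
processed_lock = Mutex.new
2.times { queue << :stop }
workers = Array.new(2) do
  Thread.new do
    while (item = queue.pop) != :stop
      item.status = :done
      processed_lock.synchronize { processed << item.id }
    end
  end
end
workers.each(&:join)
```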

Each work item in the thread pool used the with_connection trick above to get a DB connection and then loaded its work item.

To keep the various web scraping/webservice calls separate, the code for each is held in a text field in the DB and loaded dynamically as each call is required. It is instance_eval'd into the work item object, so it has access to the work item's details.
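A minimal sketch of the instance_eval idea, assuming the stored code is a plain Ruby string. ScriptedWorkItem, save_result and the script text are hypothetical; in the real system the text would come from the DB field.

```ruby
# Sketch: a script held as text (e.g. in a db text column) is instance_eval'd
# against a work item, so the script runs with the item as `self`.
# ScriptedWorkItem, save_result and the script text are hypothetical.
class ScriptedWorkItem
  attr_reader :params, :result

  def initialize(params)
    @params = params
  end

  # Helper that stored scripts can call to record their output.
  def save_result(value)
    @result = value
  end

  # Evaluate the stored script in the context of this work item.
  def run(script)
    instance_eval(script)
    result
  end
end

# Single-quoted heredoc: #{...} is interpolated only when the script runs.
script = <<~'SCRIPT'
  save_result("Searching for #{params[:query]}")
SCRIPT

item = ScriptedWorkItem.new({ query: "widgets" })
puts item.run(script)   # prints "Searching for widgets"
```

Because instance_eval runs whatever the text contains with full access to the process, this approach only suits code from a trusted source, such as the application's own database.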

There are largely 2 kinds of work items – web scrapers and webservice calls. The scraping is done via Mechanize and the webservices via Savon.

For Mechanize, the various pages/frames/forms are navigated to achieve the desired results.

For Savon, the message body is constructed and the service called.

The results are then saved back to the db.

Samsung Smart TV Dev – my progress so far…

In late 2011/early 2012, I volunteered to have a go at producing a Samsung TV app – a fairly basic videocast player app for a certain podcast network. As the technology is HTML/JavaScript, it seemed interesting.

Given Samsung provide a sample app, it seemed to be an easy job. To make it a little more complicated, I wanted to provide an app covering several RSS feeds. So the idea was a “channel selector” page which took you to an episode list for the selected channel, where you could watch an episode.

Painfully, the actual video playback is done via custom Samsung code, so it's not possible to develop fully in a browser environment. You have to use the Samsung emulator.

So, the core of the app was developed in CoffeeScript, using Middleman to generate a static site to run inside the Samsung TV emulator. I used the serenade.js front-end framework – sort of like Backbone. To make the site flexible without having to release new versions via Samsung, a separate “config” site was used; this defined which podcast feeds to include, their logos, etc. KeyboardJS was used to manage keyboard input and made it fairly easy to switch between the browser and the emulator.

Unfortunately (?) the SDK is still being developed by Samsung, so code that worked initially broke when a new version of the SDK came out.

The latest version of this app is available on GitHub – here – along with some sample config to drive the app.

Given the SDK issues, I reverted to a more basic app that just plays one feed. This has progressed to the point that it's had a few reviews with Samsung; I just need to find the time to sort out the last few items. It's also a Middleman-based app (though it's missing a Gemfile – doh). The idea is that it's a template for producing several apps: not as good from a user-experience perspective, but at least it's minimal effort from a development one.

As I didn't have any ‘real’ Samsung kit to test on, I had to rely on the emulator, which worked fairly well, despite having to be run on Windows :(

Rails Engine to produce a photography portfolio/brochureware site.

I was asked to provide a flexible photography portfolio site – brochure type site.

Given Rails was on my “fad” list, I decided to have a go that way.

What I came up with was a ‘generic’ Rails Engine that does the work of pulling the galleries etc. together. This was then used inside a specific Rails app which just had the photos/galleries/comments. The aim was that the site owner could arrange things as they wanted and then commit/push to Heroku to update their site.

The Engine is open source, available on GitHub here.

It's a bit dated now, using Rails 3.0.9, although it should be easy to update as it's quite simple :) The Gemfile only has Rails, Capybara and sqlite3 – not sure why the last 2 are there, as no DB is used.

The controllers do the work of responding to various user requests – using the folder/image structure found in the host app to define the site structure.

In the model directory, there are several classes:

- photo – wraps each photo image, including a related thumbnail and caption.
- project – wraps a directory, tracking what images are in it.
- site_config – handles parsing the overall site configuration, which is held in a YAML file in the host Rails app.
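A plain-Ruby sketch of the photo/project idea. It assumes thumbnails sit next to the originals with a "_thumb" suffix and that captions come from file names; both rules are assumptions for illustration, since the post doesn't say how the engine pairs them, and Photo and Project here are not the engine's actual classes.

```ruby
# Sketch of the photo/project models: a Project wraps one directory and
# lists its images as Photo structs, each paired with an optional thumbnail
# and a caption. The "_thumb" suffix and caption rule are assumptions.
Photo = Struct.new(:path, :thumbnail, :caption)

class Project
  IMAGE_EXTS = %w[.jpg .jpeg .png .gif].freeze
  THUMB_SUFFIX = "_thumb"

  attr_reader :dir

  def initialize(dir)
    @dir = dir
  end

  # The gallery name is taken from the folder name.
  def name
    File.basename(dir)
  end

  # Originals sorted by file name; thumbnails are attached, not listed.
  def photos
    images = Dir.children(dir)
                .select { |f| IMAGE_EXTS.include?(File.extname(f).downcase) }
                .sort
    originals = images.reject { |f| File.basename(f, ".*").end_with?(THUMB_SUFFIX) }
    originals.map do |file|
      base = File.basename(file, ".*")
      thumb = images.find { |f| File.basename(f, ".*") == base + THUMB_SUFFIX }
      caption = base.tr("_-", "  ").capitalize   # "sunset_beach" -> "Sunset beach"
      Photo.new(File.join(dir, file), thumb && File.join(dir, thumb), caption)
    end
  end
end
```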

The lib directory defines the basic engine configuration – how it hooks into the host app.

Under test/dummy there is a minimal Rails app using the engine, for testing. Although I can't see a sample config file there – perhaps it relied on the defaults :)
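Falling back to defaults when the YAML config is missing or partial could look something like this stdlib sketch. The keys and default values are hypothetical; the post doesn't show the engine's actual YAML format.

```ruby
require "yaml"

# Sketch of a site_config-style class: parse the host app's YAML settings and
# fall back to defaults for any missing keys. Keys and defaults are hypothetical.
class SiteConfig
  DEFAULTS = {
    "title" => "Portfolio",
    "galleries_dir" => "public/galleries",
    "thumbnail_suffix" => "_thumb"
  }.freeze

  attr_reader :settings

  def initialize(yaml_text)
    # An empty document parses to nil/false, so treat it as "no overrides".
    parsed = YAML.safe_load(yaml_text.to_s) || {}
    raise ArgumentError, "site config must be a mapping" unless parsed.is_a?(Hash)
    @settings = DEFAULTS.merge(parsed)
  end

  # Look up a setting by string or symbol key.
  def [](key)
    @settings.fetch(key.to_s)
  end
end

config = SiteConfig.new("title: Jane Doe Photography\n")
puts config[:title]           # the overridden value
puts config[:galleries_dir]   # falls back to the default
```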

At the time, it seemed a good way to produce the site. I’ve not had to use it again yet, and that will be the test of how ‘generic’ it is…

Ruby via the backdoor…

At work, our team does not do much development – we support a third party’s product. Most work is around the edges, integrating it with the rest of the systems.

Some of that work is in Java, but recently I had a chance to sneak in a little Ruby (or JRuby to be specific).

Why Ruby/JRuby? To me, the main reason is that it's a more succinct way of expressing the program logic, and combined with JRuby's JVM integration features, it was an obvious choice.

The premise was that we wanted to make the extension of a component flexible/scriptable, and Ruby seemed like a good fit.

Here are some of the highlights of what was done…

The requirement was to publish data from one source to a webservice.

The initial cut was a pipeline of classes that transformed the source data into the target format and then called the webservice. The problem with this solution was that the webservice was very slow, only able to handle around 16 calls per second, compared to the 100 messages per second coming from the source.

This was addressed by adding some threading, calling out to Java's concurrency utilities (java.util.concurrent).

A threadpool was created (the im.num_threads property specifies the number of threads to run):

@exec = java.util.concurrent.Executors.new_fixed_thread_pool $props["im.num_threads"].to_i

Then, for each message that came in, its handling was passed to the threadpool:

    @exec.execute do
      # ... do the work; this happens in a separate thread
    end
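On MRI rather than JRuby, roughly the same pattern can be built from Ruby's own Thread and Queue. FixedThreadPool is a hypothetical class, not a Java or gem API; it only mimics the shape of execute and shutdown. Under MRI's global interpreter lock, threads don't run Ruby code in parallel, but they do overlap while blocked on I/O, which is what matters for slow webservice calls.

```ruby
# Plain-Ruby analogue of a fixed-size thread pool: a set number of worker
# threads pull blocks off a shared queue. FixedThreadPool is hypothetical.
# Note: in this sketch a job that raises will kill its worker thread.
class FixedThreadPool
  def initialize(num_threads)
    @jobs = Queue.new
    @workers = Array.new(num_threads) do
      Thread.new do
        # Queue#pop blocks until a job arrives; :shutdown ends the worker.
        while (job = @jobs.pop) != :shutdown
          job.call
        end
      end
    end
  end

  # Like ExecutorService#execute: hand the block to a worker thread.
  def execute(&block)
    @jobs << block
  end

  # Let queued jobs finish, then stop the workers. Unlike Java's
  # ExecutorService#shutdown, this also waits for the workers to exit.
  def shutdown
    @workers.size.times { @jobs << :shutdown }
    @workers.each(&:join)
  end
end

pool = FixedThreadPool.new(4)
results = Queue.new
10.times { |i| pool.execute { results << i * i } }
pool.shutdown
squares = Array.new(results.size) { results.pop }
```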

This meant we could hammer the webservice with a lot more calls; the downside is that we need to be wary of concurrency issues.

Some of the processes in the pipeline were completely standalone, so each was wrapped in a Java ThreadLocal object, giving every thread its own instance:

@time_formatter = $jruby_obj.threadLocal { FormatTime.new }
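Outside JRuby, a rough equivalent of that ThreadLocal wrapper can use Ruby's thread variables. PerThread and the FormatTime stand-in are hypothetical; the post's $jruby_obj.threadLocal helper isn't shown, so this is not its implementation.

```ruby
# Rough plain-Ruby analogue of java.lang.ThreadLocal: each thread lazily
# builds its own instance from the factory block and then reuses it.
# thread_variable_get/set are thread-local (Thread#[] is fiber-local).
class PerThread
  def initialize(&factory)
    @factory = factory
    @key = :"per_thread_#{object_id}"   # distinct key per PerThread object
  end

  # Return the calling thread's instance, creating it on first use.
  def get
    current = Thread.current
    value = current.thread_variable_get(@key)
    if value.nil?
      value = @factory.call
      current.thread_variable_set(@key, value)
    end
    value
  end
end

# Hypothetical stand-in for an object that shouldn't be shared between threads.
FormatTime = Struct.new(:owner)
time_formatter = PerThread.new { FormatTime.new(Thread.current) }

pairs = Array.new(3) { Thread.new { [time_formatter.get, time_formatter.get] } }.map(&:value)
```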

Where a process was not standalone but had shared data structures, a mutex was used to ensure only one thread accessed the structure at a time.

    # create the mutex object - once for the shared object
    @mutex = Mutex.new
    # ...
    # then, when we need to control access to some data, we do this:
    @mutex.synchronize do
      # ... work with the shared data
    end
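A small runnable version of that pattern: twenty threads update one shared Hash, and Mutex#synchronize makes each read-modify-write a single step, so no increments are lost on any Ruby implementation. CallStats and its status keys are hypothetical.

```ruby
# Sketch: one mutex guards one shared structure (a Hash of counters).
# CallStats and the :ok/:error keys are hypothetical examples.
class CallStats
  def initialize
    @mutex = Mutex.new      # created once, alongside the shared object
    @counts = Hash.new(0)
  end

  # Read-modify-write under the lock, so concurrent updates can't interleave.
  def record(status)
    @mutex.synchronize { @counts[status] += 1 }
  end

  # Return a copy taken under the lock, safe to use outside it.
  def snapshot
    @mutex.synchronize { @counts.dup }
  end
end

stats = CallStats.new
threads = Array.new(20) do
  Thread.new { 500.times { |i| stats.record(i.even? ? :ok : :error) } }
end
threads.each(&:join)
p stats.snapshot   # 20 threads x 250 of each status
```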

Now, by specifying 20-30 threads, we can do 100+ calls per second to the webservice :)
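A back-of-the-envelope check using Little's law (calls in flight = arrival rate × time per call), assuming the 16 calls per second was measured with one call in flight at a time, so each call took about 1/16 s:

```latex
N \approx \lambda W
  = 100\,\tfrac{\text{calls}}{\text{s}} \times \tfrac{1}{16}\,\tfrac{\text{s}}{\text{call}}
  = 6.25
```

That suggests about 7 concurrent calls would keep up if the webservice's response time stayed flat under load; a larger pool leaves headroom in case it doesn't.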

Cassandra … as a Service

Cassandra is a database designed for scalability and high availability, without compromising on performance.

Is that what you are looking for? But want to avoid the headache of setting it up? Of maintaining it?

Then you might be interested in this new Cassandra as a Service option.

Follow the above link and register your interest.

Hemocyl (part 2)

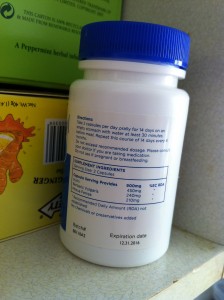

The site was updated to use a secure link for payments, so I decided to take the leap and buy it. Packet arrived today.

The directions are to take 2 per day for 2 weeks and that should cover you for 6 months. If you don’t hear from me again, I blame the tablets…

UPDATE:

One week into taking them, still alive :). I “think” they seem to be helping – the discomfort generally seems to be reduced. Obviously still a long way to go before we know if they last for 6 months.

Hemocyl – is it legit?

I wonder if there are any reviews out there by real people using Hemocyl? Everything I've found so far is just adverts.

NOTE: The purchase process is NOT secure, i.e. not https – that rings alarm bells for me – and there's not even a PayPal option.

Article in the London Evening Standard. Not sure it's factually correct – I don't think you take 2 tablets twice a year…

Yahoo Question on it – here

Anyone interested in a “shared purchase”? E.g. we each pay a quarter/sixth and each get a month or so to try it out?

@kimptoc

Ebbsfleet / MyFootball Club – needs a little help

Ebbsfleet United needs a little help

Hence this pledge:

http://www.pledgebank.com/2011playngbudget

To donate (£21.73), use this PayPal link:

Thank you!

MySQL and Postgres quick start…

Every 6-12 months I need to set up a new MySQL and/or Postgres database, and by then I have forgotten how to do it (again). So hopefully by writing it here, I will remember, or at least know where to look in future.

This assumes Ubuntu, with MySQL/Postgres already installed.

MYSQL

Create the database

sudo mysqladmin create [dbname]

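Create a user for that database, using the mysql tool (the user has no password and can only log in from localhost). Newer MySQL versions (8.0+) no longer create users implicitly via GRANT, so create the user first:

sudo mysql

mysql> CREATE USER '[new user]'@'localhost';
mysql> GRANT ALL PRIVILEGES ON [dbname].* TO '[new user]'@'localhost';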

POSTGRES

Create the db

createdb [dbname]

Create the user

sudo -u postgres createuser --superuser [new user]

As usual, this is a work in progress, to be updated/added to as I learn a little more/need more.